Novel UI Design Patterns for AI-Powered Applications

A significant challenge with current generative AI models is their relatively slow speed in generating text. A chat-style interface, such as ChatGPT, handles this delay quite well because users can start reading the response as it is generated.

However, not every AI-powered user flow can be handled by allowing users to read the response as it is being generated. For example, if you want to autofill a form based on some text, you can’t show the raw output of the LLM; the user has to wait until the complete response has been turned into form fields.

Choose the Right Model in Terms of Speed vs Accuracy

Generally, larger models are slower but more accurate. If a user flow requires a quick response, consider a smaller model. As Ryan J. Salva explains in his talk, GitHub Copilot uses GPT-3.5 Turbo for code completion and GPT-4 for chat: code completion needs a quick response so that developers stay in the flow, whereas in a chat interface it is acceptable to wait a bit longer for a more accurate response.

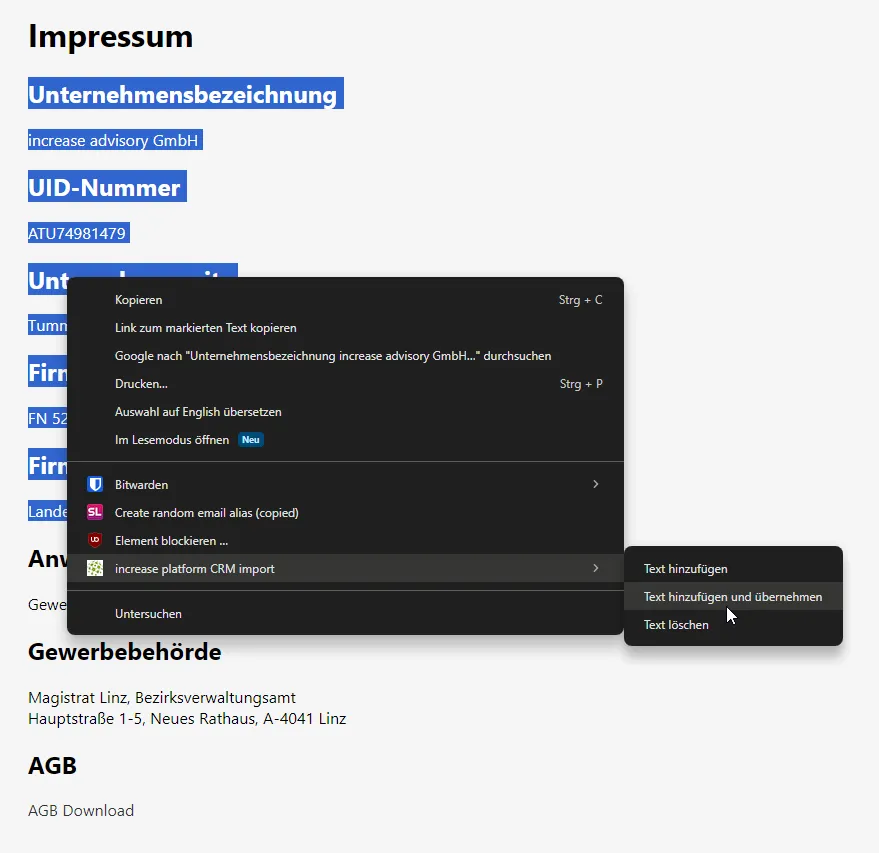

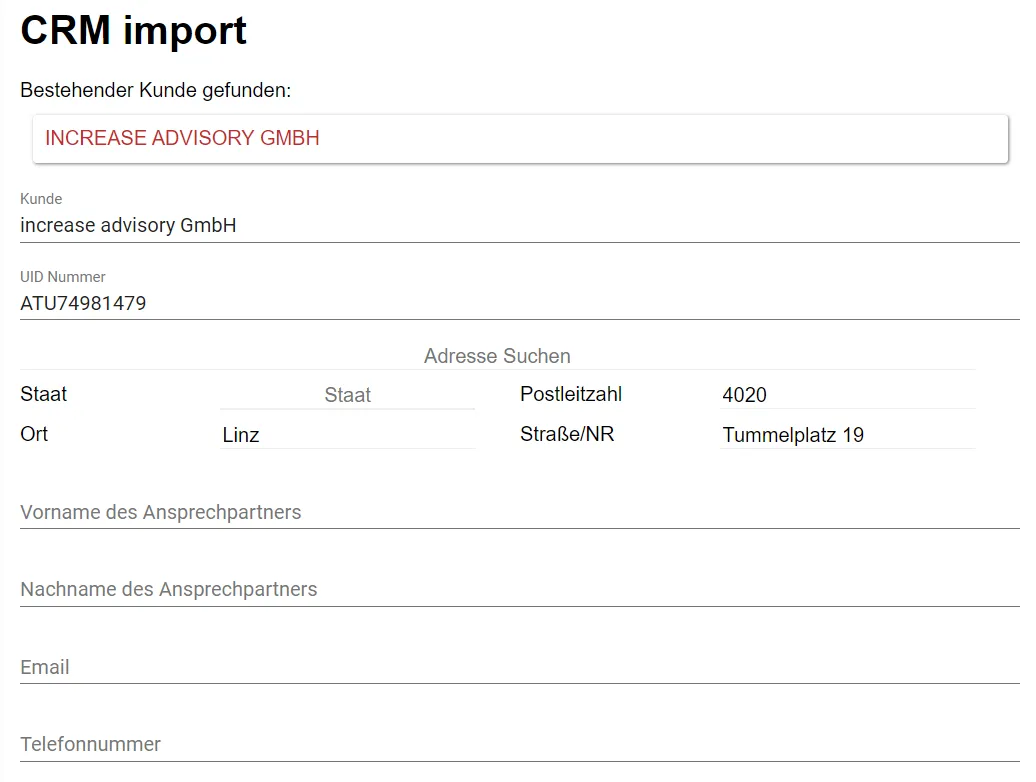

For our CRM import flow, we developed a Chrome extension that lets a user import customer information by selecting text on a website and choosing our extension from the context menu.

The extension opens a page of our CRM application in a new tab and passes the captured text in a query parameter. The CRM then calls our Text API to extract the fields of the create-customer form from the text. This flow is time-sensitive because the alternative to using our extension is to copy and paste the information from the website into the CRM by hand. We therefore use a single call to openai/gpt-3.5-turbo for this task to make it as fast as possible.
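The hand-off through a query parameter can be sketched as follows. This is only an illustration of the encoding step, written in Python for brevity (a real Chrome extension would do this in JavaScript). The URL `https://crm.example.com/customers/new`, the parameter name `text`, and the length limit are assumptions and do not come from our actual implementation.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical CRM page; the real URL and parameter name are not stated here.
CRM_NEW_CUSTOMER_URL = "https://crm.example.com/customers/new"


def build_import_url(selected_text: str, max_chars: int = 2000) -> str:
    """Return a CRM URL that carries the selected text as a query parameter."""
    # Collapse whitespace and cap the length so the URL stays reasonably short.
    text = " ".join(selected_text.split())[:max_chars]
    return f"{CRM_NEW_CUSTOMER_URL}?{urlencode({'text': text})}"


def read_captured_text(url: str) -> str:
    """Recover the captured text on the receiving side."""
    values = parse_qs(urlsplit(url).query).get("text", [""])
    return values[0]
```

Percent-encoding the text matters because selected text often contains characters such as `&` or line breaks that would otherwise break the URL.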

By contrast, our invoice management solution, Belegbar, allows us to build a flow where the user does not need to wait for a response from our AI. Belegbar uses our Document API, which starts by creating multiple text options from our OCR pipeline. We then select up to six text options and run them through openai/gpt-3.5-turbo to read the data from an invoice and create an ebInterface XML file that bookkeeping software can import. Furthermore, Belegbar tries to correct for net prices being confused with gross prices, and vice versa. All of this makes processing an invoice computationally expensive.
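To give an idea of what a net/gross correction can look like, here is a minimal sketch. It is not Belegbar's actual logic: the function names, the tolerance, and the assumption that amounts and tax rates are non-negative are all chosen for illustration.

```python
from decimal import Decimal
from typing import Tuple


def fix_net_gross(net: Decimal, gross: Decimal) -> Tuple[Decimal, Decimal]:
    """Swap the amounts if they are obviously reversed.

    Assumes non-negative amounts and a non-negative tax rate, so the net
    amount can never be larger than the gross amount.
    """
    if net > gross:
        return gross, net
    return net, gross


def is_consistent(net: Decimal, gross: Decimal, tax_rate: Decimal,
                  tolerance: Decimal = Decimal("0.01")) -> bool:
    """Check that gross equals net plus tax, within a rounding tolerance."""
    return abs(net * (1 + tax_rate) - gross) <= tolerance
```

A check like `is_consistent` can also be used to flag an invoice for manual review when the extracted amounts do not add up.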

Allow the User to Move On

It doesn’t make sense for a user to upload an invoice and stare at the screen until the AI has finished processing it. Our solution is to have the user interact with the application itself as little as possible. The user can upload documents directly via the web UI, but also via the Web Share Target API or by forwarding an email with an attachment to a special email address. The user can then move on while the invoice is processed in the background.
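The idea of accepting an upload and returning immediately can be sketched like this. It is a simplified, in-process illustration using a thread pool; `process_invoice`, `accept_upload`, and the in-memory `jobs` dictionary are hypothetical names, and a production system would more likely use a persistent job queue.

```python
import uuid
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Dict

executor = ThreadPoolExecutor(max_workers=2)
jobs: Dict[str, Future] = {}  # in-memory only; for illustration


def process_invoice(document: bytes) -> dict:
    """Placeholder for the slow OCR and LLM pipeline."""
    return {"size": len(document)}


def accept_upload(document: bytes) -> str:
    """Queue the document and return a job id without waiting for the result."""
    job_id = uuid.uuid4().hex
    jobs[job_id] = executor.submit(process_invoice, document)
    return job_id
```

The upload handler only hands back a job id, so the same function can sit behind the web UI, a share target, or an email inbox.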

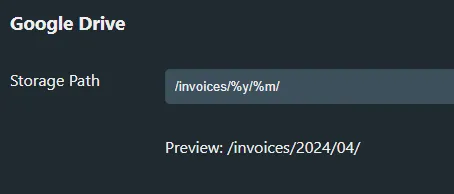

When the processing is done, we offer automatic uploading to Google Drive in a user-defined folder structure. In the case of invoices, the folder an invoice belongs in is determined by the invoice date. Users can define the folder to store the invoices in with a simple templating language that just replaces %y, %m, %d with the year, month, and day of the invoice date, respectively.
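A templating language of this kind needs very little code. The sketch below assumes a four-digit year and zero-padded month and day; the function name `render_folder` and the example folder names are made up for illustration.

```python
from datetime import date


def render_folder(template: str, invoice_date: date) -> str:
    """Replace %y, %m and %d with parts of the invoice date."""
    replacements = {
        "%y": f"{invoice_date.year:04d}",   # assumption: four-digit year
        "%m": f"{invoice_date.month:02d}",  # assumption: zero-padded month
        "%d": f"{invoice_date.day:02d}",    # assumption: zero-padded day
    }
    for placeholder, value in replacements.items():
        template = template.replace(placeholder, value)
    return template
```

For example, `render_folder("Invoices/%y/%m", date(2024, 3, 5))` returns `"Invoices/2024/03"`.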

Invoices that can’t be sorted automatically can be reviewed by the user at the end of the month. The invoice list has a filter that shows only the invoices that still need to be sorted, so we can simply send the user a reminder email with a link to the invoice list pre-configured with that filter.

Alternatively, we allow downloading all invoices for a time period as a ZIP file.

Both options allow users to open Belegbar once a month while having all their invoices in order.

“Virtual Coworker” Pattern

You can go a step further and build a “virtual coworker” that processes emails or chat messages. Almost all chat applications have a way of building bots that can read messages and respond to them. For example, you can build a virtual coworker that can automatically add an offer to your ERP system when you send it a PDF file with an offer. If the virtual coworker needs more information, it can ask for it or just ping users to finish entering the offer in the ERP system.

Don’t Annoy the User

Keep in mind that a virtual coworker should be as patient as possible. If a task is not time-sensitive, you can wait before you respond to a user. If you need the user’s help for your virtual coworker, try to bundle the corrections that need to be done into a single interaction. In Belegbar, rather than sending an email for each invoice that needs to be sorted, we send a single email with a link to the invoice list pre-configured with the filter for invoices that need to be sorted.
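Bundling can be sketched as one function that turns all open items into a single message. The `Invoice` class, the URL `https://app.example.com/invoices`, and the `filter=needs_sorting` parameter are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from typing import List, Optional
from urllib.parse import urlencode

# Hypothetical invoice list URL and filter name.
INVOICE_LIST_URL = "https://app.example.com/invoices"


@dataclass
class Invoice:
    invoice_id: str
    needs_sorting: bool


def build_reminder(invoices: List[Invoice]) -> Optional[str]:
    """Return one reminder text for all unsorted invoices, or None if there are none."""
    pending = [inv for inv in invoices if inv.needs_sorting]
    if not pending:
        return None  # nothing to do, so don't bother the user
    link = f"{INVOICE_LIST_URL}?{urlencode({'filter': 'needs_sorting'})}"
    noun = "invoice" if len(pending) == 1 else "invoices"
    return f"{len(pending)} {noun} still need to be sorted: {link}"
```

Returning `None` when nothing is pending makes it easy for the caller to skip the email entirely.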

Conclusion

When your AI-powered feature needs to be a part of an interactive flow, such as autofilling a form based on some text, pick a smaller language model such as openai/gpt-3.5-turbo.

Otherwise, figure out a way to let the user move on while your AI does the processing. For example, you can build a “virtual coworker” that processes emails or chat messages and lets users know if there is something they need to do. You can notify the user later if you need input from them. Keep in mind that multiple notifications can be annoying, so try to bundle the corrections that need to be done into a single interaction.